![[Header]](../XuShared2/Line3.jpeg)

Add a Comment (Go Up to OJB's Blog Page) Recursion: See RecursionEntry 1431, on 2012-08-28 at 21:36:06 (Rating 1, Computers) Today I listened to a Radio NZ podcast which discussed computer science. One of the topics they talked about was recursion, but in my humble opinion they didn't explain it very well. Many years ago I did a computer science degree and have worked as a computer consultant and programmer ever since so I wanted to offer my own contribution to the topic here: that is recursion and programming in general.

First I want to say why I love programming so much. To me it is the ideal combination of art and science. That suits my personality best because I am an analytical and precise person but I also like to be creative. There is no other profession that I know of which combines both of those elements in quite the same way. Writing a program involves solving a problem in an analytical way but many of the elements of creating a program also involve a lot of creativity and "beauty".

In programming (as in many fields where beauty seems a strange word to use as a description, such as maths) beauty refers more to the elegance, simplicity, and subtlety of a solution to a problem more than any outward manifestation of the item being created. In programming there is often an opportunity to create a visual interface which can be described as beautiful but that's not really what I am talking about here. It's deeper and more subtle than that.

When I write a program I don't just try to solve the initial problem, I try to make the solution extendable, tolerant of errors, fast, compact, and easy to understand. Usually a short program is a far more impressive achievement than a long one which has the same function. And every moderately complex problem has an infinite (or so close to infinite that it doesn't matter) number of possible solutions, some of which are elegant and beautiful and some which aren't.

Of course there is a certain amount of subjectivity in judging how good a program is but, as in most areas of expertise, skilled programmers will generally agree on what is good and what isn't.

Now getting back to recursion. First of all, what is it? Well it's a way to solve a problem by creating a series of steps (what computer scientists call an algorithm) and allowing that algorithm to refer to itself. The nerdy joke in the computer world is that if you look up recursion in the dictionary the definition will include "see recursion". There is also the little "in" jokes where some languages have recursive names. For example the name of the scripting language "PHP" is a recursive acronym for "PHP: Hypertext Preprocessor", and GNU is an acronym for Gnu's Not Unix.

The serious example given in the podcast was an algorithm to climb stairs which went like this: (I have given the following steps the name "climb")...

algorithm "climb":

go up one step

are you at the top?

- if no: "climb"

end (of climb)

You can see in this example that the algorithm called "climb" has a step which refers to itself (the last step "climb"). But this is a bad example because it could also be done like this...

start:

go up one step

are a you at the top?

- if no go back to "start"

end

This is what we call an iterative algorithm: it iterates or "loops" around until it stops at a particular step. Generally these are more efficient than recursive algorithms.

By the way, I realise that neither of these work properly if you start at the top of the steps already. That sort of thing is a common programming problem and one which is obvious and quite easy to fix here but often not in more complex algorithms.

So what about an example where recursion does make sense? There is a classic case often used in computing which involves processing a binary tree. So what is a binary tree? It's a structure in a computer's memory which contains information in a way which makes it easy to search, sort, and manipulate in other ways. Imagine a series of words and with each word are two links to two other words (or the link could be empty). The words are put in the tree so that the left link always goes to words alphabetically before the current word, and the right link to words after.

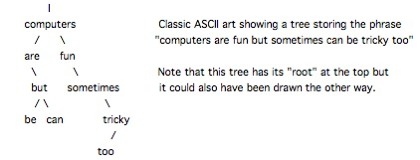

If the first word is "computers" for example and the second word is "are" the second word would be accessed from the left link from "computers". If the third word was "fun" then that would go on the right link from "computers". if the fourth word was "sometimes" it couldn't go on the right link from "computers" because "fun" is already there so it would go on the right link from "fun" instead ("s" comes after "f"). If the next word was "but" that would go right from "are". Continuing the sentence with the words "can be tricky too" we would get this...

Now let's say I wanted to display the words in alphabetical order. First I make a link pointing to the top of the tree. Now I create some steps called "sort" which I give a link to the current word (initially "computers"). Here are the steps...

Algorithm "sort" using "link":

Is there a left link at the word for the link you are given?

- if yes, "sort" with the left link

- if no:

-- display the current word

-- is there a right link?

---- if yes "sort" with the right link.

end (of sort)

That's it! That will display the words alphabetically. The first link points to "computers" but there is a left link so we send a link to that which points to "are". There is no left link so we print "are" and send the right link (to "but") to sort. There is a left link so we send that (to "be") to sort. There is no left link so it prints "be" and finds no right link. At that point the sort algorithm ends but the previous sort which caused this sort to start is still active so we go back to that. That sort pointed to "but" and we had just taken the left link, so we carry on from that and check the right link using sort. That takes the next sort to "can", etc...

The key thing here is that each time "sort" is used it sticks around until the end, so there can be any number of sorts waiting to continue where they left off before a "sort" further down the tree was started.

Wow, that sounds so complicated but it's really quite simple. I did all of this from memory and it's quite easy when you understand the concept. Without recursion sorting a binary tree would be difficult because there is no reverse link back up the tree and no easy way to remember what has already been done at each word. With recursion when one version of sort launches another its information is left behind and creates a way to go back up the tree when the one it started has finished running.

The recursive algorithm in this case is efficient and elegant. It's also very simple because all of the complexity that might be required otherwise is available as an intrinsic part of how recursion works. It's a simple example of "beauty" in programming.

There are no comments for this entry.

You can leave comments about this entry using this form. To add a comment: enter a name and email (both optional), type the number shown above, enter a comment, then click Add.

Note that you can leave the name blank if you want to remain anonymous.

Enter your email address to receive notifications of replies and updates to this entry.

The comment should appear immediately because the authorisation system is currently inactive.

![[Comments]](../XuShared/Comment1B.jpeg) ![[Preview]](../XuShared/Comment6B.jpeg) ![[Blog]](../XuShared/Up2B.jpeg)

|

![[Comments]](../XuShared/Comment1B.jpeg)

![[Preview]](../XuShared/Comment6B.jpeg)

![[Blog]](../XuShared/Up2B.jpeg)